Computational Text

Procedural generation can be used to create form in almost any medium: image, video, animation, sound, sculpture. This chapter introduces some tactics for procedurally generating text, which may be the medium most often computationally generated. Web pages are built out of text, and most of the time this text is computationally generated at least to some degree.

Consider how a Google search result page is built. Google uses programs to crawl the web, collecting a database of information about every page. When you perform a search, another program searches this database for relevant information. This information is then carefully excerpted, summarized, formatted, and collated. The resulting web page of results is built on the fly and sent to your browser for you to read.

Social media sites like Facebook and Twitter are software systems for collecting and sharing user-created content, largely text. Even websites primarily concerned with other media, like YouTube and Instagram, must generate HTML text to showcase their videos and images.

The Imitation Game

Search engines and social media sites are certainly procedurally generating text, but for the most part they are not generating content. Few would argue that these sites are being truly creative. In fact, many have argued that computers are not capable of true creativity at all.

Not until a machine can write a sonnet or compose a concerto because of thoughts and emotions felt, and not by the chance fall of symbols, could we agree that machine equals brain—that is, not only write it but know that it had written it. No mechanism could feel (and not merely artificially signal, an easy contrivance) pleasure at its successes, grief when its valves fuse, be warmed by flattery, be made miserable by its mistakes, be charmed by sex, be angry or depressed when it cannot get what it wants.

Geoffrey Jefferson

In 1950, Alan Turing directly addressed this argument and several others in Computing Machinery and Intelligence, in which he considered the question “Can machines think?” Rather than answering the question directly, Turing proposes an imitation game, often referred to as the Turing Test, which challenges a machine to imitate a human over “a teleprinter communicating between two rooms”. Turing asks whether a machine could do well enough that a human interrogator would be unable to tell such a machine from an actual human. He argues that such a test would actually be harder than needed to prove the machine was thinking—after all, a human can certainly think, but could not convincingly imitate a computer.

Generating Language

Creativity can be expressed in many mediums, but—perhaps as a consequence of the Turing Test using verbal communication—artificial creativity is often explored in the context of natural-language text generation. This chapter introduces three common and accessible text generation tactics: string templating, Markov chains, and context-free grammars.

These techniques focus on syntax—the patterns and structure of language—without much concern for semantics—the underlying meaning expressed. They tend to result in text that is somewhat grammatical but mostly nonsensical. Natural-language processing and natural-language generation are areas of active research with numerous sub-fields including automatic summarization, translation, question answering, and sentiment analysis. Much of this research is focused on semantics, knowledge, and understanding and often approaches these problems with machine learning.

Generating other Media via Text

Generating text can be a step in the process of generating form in other media. The structure of a webpage is defined in HTML. Its layout and style are defined in CSS. SVG is a popular format for defining vector images. The ABC and JAM formats represent music. Three-dimensional objects can be represented in OBJ files. All of these formats are plain text files.

You can think about these media in terms of the text files that represent them. This provides a fundamentally different point of view and can lead to novel approaches for generating form.
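As a rough illustration of this point of view, the sketch below draws an image by assembling SVG markup as an ordinary string. The function name `makeCirclesSvg` and its parameters are hypothetical, chosen only for this example; the optional `rng` argument exists so the random source can be swapped out.

```javascript
// Hypothetical helper: builds an SVG image as plain text.
// `rng` is any function returning a number in [0, 1); it defaults to Math.random.
function makeCirclesSvg(count, size = 200, rng = Math.random) {
  const circles = [];
  for (let i = 0; i < count; i++) {
    const cx = Math.round(rng() * size); // circle center, x
    const cy = Math.round(rng() * size); // circle center, y
    const r = 5 + Math.round(rng() * 20); // radius between 5 and 25
    circles.push(
      `  <circle cx="${cx}" cy="${cy}" r="${r}" fill="none" stroke="black" />`
    );
  }
  // The finished "image" is just lines of text joined together.
  return [
    `<svg xmlns="http://www.w3.org/2000/svg" width="${size}" height="${size}">`,
    ...circles,
    `</svg>`,
  ].join("\n");
}

console.log(makeCirclesSvg(5));
```

Saving the printed text to a file with an .svg extension should give an image that a browser can open.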

Programs that Write Programs

Most programming languages are themselves text-based. Programs that generate programs are common and important computing tools. Compilers are programs that rewrite code from one language to another. GUI coding environments like Blockly and MakeCode generate corresponding JavaScript. Interface builders like the one in Xcode generate code scaffolding which can be added to manually. Of course, programs that write programs can also have more esoteric functions. A quine is a program that generates a copy of itself.

Examples of Computational Text

Content Generators

Subreddit Simulator Reddit The Subreddit Simulator is a subreddit populated entirely by bots using Markov Chains trained on posts made across Reddit. This post explains the sub more thoroughly.

NaNoGenMo 2018 Competition Annual competition to procedurally generate a 50,000 word novel.

Indie Game Generator Tool An instant pitch for your next game project.

Pentametron Twitter Bot A Twitter bot that pairs up tweets to create couplets in iambic pentameter.

Procedural Journalism

The Best and Worst Places to Grow Up New York Times This fantastic NYT piece looks at how where someone grows up impacts income. To make the information relatable the story adapts itself based on the location of the reader.

Interactive Journalism Collection A curated collection of “outstanding examples of visual and interactive journalism”.

The little girl/boy who lost her/his name New York Times NYT covers a custom, made-to-order children’s book.

Associated Press Media Outlet The Associated Press procedurally generates college sports coverage and corporate earnings reports.

Bots

ELIZA wikipedia.org In 1964 Joseph Weizenbaum created ELIZA, a therapist chatbot that you can still talk to today.

Tay wikipedia.org Tay was an AI Twitter bot created by Microsoft and launched on March 23, 2016. Less than a day later, it was shut down after posting many controversial, inflammatory, and racist tweets. Read more about it on The Verge.

String Templates

String templating is a basic but powerful tool for building text procedurally. If you have ever completed a Mad Lib fill-in-the-blank story, you’ve worked with the basic idea of string templates.

Make an Amendment!

This demo populates a template with the words you provide to generate a new constitutional amendment.


Since generating HTML strings is such a common problem in web development, there are many JavaScript libraries for working with string templates, including Mustache, Handlebars, doT, Underscore, and Pug/Jade.

Starting with ES6, JavaScript has native support for string templates via template literals. Template literals are now widely supported in browsers without the need for a preprocessor.

A JavaScript template literal is a string enclosed in back-ticks:

`I am a ${noun}!`;

The literal above has one placeholder: ${noun}. The content of the placeholder is evaluated as a JavaScript expression and the result is inserted into the string.

let day = "Monday";

console.log(`I ate ${2 * 4} apples on ${day}!`);

// I ate 8 apples on Monday!

Book Title

This example generates a book title and subtitle by populating string templates with words randomly chosen from a curated list.
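A minimal sketch of the idea, not the example's actual source: the word lists, the `pick` helper, and the `makeTitle` function are all made up for illustration.

```javascript
// Hypothetical curated word lists; swap in your own vocabulary.
const adjectives = ["Silent", "Electric", "Forgotten", "Crimson"];
const nouns = ["Garden", "Machine", "River", "Alphabet"];
const topics = ["memory", "weather", "arithmetic", "sleep"];

// Returns one item chosen uniformly at random from a list.
// `rng` defaults to Math.random and can be replaced for repeatable results.
function pick(list, rng = Math.random) {
  return list[Math.floor(rng() * list.length)];
}

// Fills two templates, one for the title and one for the subtitle.
function makeTitle(rng = Math.random) {
  const title = `The ${pick(adjectives, rng)} ${pick(nouns, rng)}`;
  const subtitle = `A Brief History of ${pick(topics, rng)}`;
  return `${title}: ${subtitle}`;
}

console.log(makeTitle());
```

Most of the character of the output comes from the curated lists rather than the code, so editing the lists is usually the quickest way to change the tone.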

Life Expectancy

This example uses string templates and data lookups to create personalized text.
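The sketch below shows one way a template and a lookup table might be combined. It is not the example's source: the places are fictional, the numbers are invented placeholders rather than real statistics, and `lifeSentence` is a hypothetical name.

```javascript
// Placeholder data for fictional places. These numbers are invented
// for illustration and are not real statistics.
const expectancyByPlace = {
  Atlantis: 81,
  "El Dorado": 77,
};

// Looks up the reader's place, does a little arithmetic, and fills a template.
function lifeSentence(name, age, place) {
  if (!Object.prototype.hasOwnProperty.call(expectancyByPlace, place)) {
    return `Sorry ${name}, there is no data for ${place}.`;
  }
  const expectancy = expectancyByPlace[place];
  const remaining = expectancy - age;
  if (remaining <= 0) {
    return `${name}, at ${age} you have already outlived the average for ${place} (${expectancy}).`;
  }
  // Choose the singular or plural form so the sentence stays grammatical.
  const unit = remaining === 1 ? "year" : "years";
  return `${name}, the average resident of ${place} lives to ${expectancy}, so you might expect about ${remaining} more ${unit}.`;
}

console.log(lifeSentence("Ada", 36, "Atlantis"));
```

Handling the missing-data and singular cases explicitly keeps the personalized text from reading as obviously machine-made.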

Markov Chains

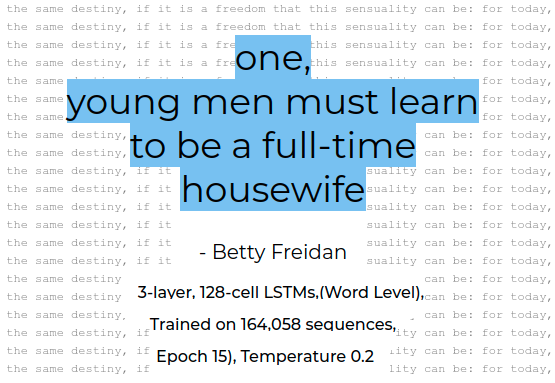

Markov chains produce sequences by choosing each item based on the previous item and a table of weighted options. This table can be trained on examples, allowing Markov chains to mimic different text styles. Markov chains are a useful tool for procedurally generating anything that can be represented as a sequence, including text, music, or events.

Markov Chain

Explore the Markov chain algorithm with paper and pencil using this worksheet.

Build the Model

The right side of the worksheet lists every word that occurs in the Dr. Seuss poem. These are the “keys”. Find every occurrence of each key in the poem. Write the following word in the corresponding box. Do not skip repeats.

Generate Text

Choose a random word from the keys. Write it down. Choose a word at random from the corresponding box, and write it down. Continue this process, choosing each word based on the previous one.
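The two worksheet steps can be sketched in code roughly as follows. This is a minimal word-level version under a few assumptions: the sample sentence stands in for the poem, and `buildModel`, `generate`, and `pick` are hypothetical names.

```javascript
// Returns one item chosen uniformly at random from a list.
function pick(list, rng = Math.random) {
  return list[Math.floor(rng() * list.length)];
}

// Build the model: for every word (key), list each word that follows it.
// Repeats are kept, so more frequent followers are more likely to be chosen.
function buildModel(text) {
  const words = text.split(/\s+/).filter(Boolean);
  const model = new Map();
  for (let i = 0; i < words.length - 1; i++) {
    if (!model.has(words[i])) model.set(words[i], []);
    model.get(words[i]).push(words[i + 1]);
  }
  return model;
}

// Generate text: start at a random key, then repeatedly choose a follower
// of the previous word. Stops at maxWords or at a word with no followers.
function generate(model, maxWords = 12, rng = Math.random) {
  let word = pick([...model.keys()], rng);
  const output = [word];
  while (output.length < maxWords && model.has(word)) {
    word = pick(model.get(word), rng);
    output.push(word);
  }
  return output.join(" ");
}

// Stand-in training text; any longer passage can be used instead.
const sample = "the cat sat on the mat and the dog sat on the log";
const model = buildModel(sample);
console.log(model.get("the")); // the "box" of followers for the key "the"
console.log(generate(model));
```

Keeping repeats in each list is what makes the choice weighted: a word that follows the key twice is twice as likely to be picked.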

Markov Text Generator

This example creates a Markov chain model based on provided text and then generates text based on that model.

Context-Free Grammars

Consider this HTML excerpt:

<div><b>Hello, World!</div></b>

This isn’t proper HTML. The b tag should be closed before the div tag.

<div><b>Hello, World!</b></div>

HTML is text, and it can be represented by a text string. But not all text strings are valid HTML. HTML is a formal language and it has a formal grammar, a set of rules that define which sequences of characters are valid. Only strings that meet those rules can be properly understood by code that expects HTML.

Context-free grammars are a subtype of formal grammars that are useful for generating text. A context-free grammar is described as a set of replacement rules. Each rule represents a legal replacement of one symbol with zero, one, or multiple other symbols. In a context-free grammar, the rules don’t consider the symbols before or after—the context around—the symbol being replaced.
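To make the idea of replacement rules concrete, here is a small sketch written without any library. The rule format, the `grammar` object, and the `expand` function are assumptions made for this example and are not the notation used by Tracery or RiTa.

```javascript
// Each key is a symbol. Each symbol has a list of legal replacements,
// and each replacement is a sequence of symbols or literal words.
const grammar = {
  sentence: [["nounPhrase", "verbPhrase"]],
  nounPhrase: [["the", "noun"], ["the", "adjective", "noun"]],
  verbPhrase: [["verb"], ["verb", "nounPhrase"]],
  noun: [["robot"], ["poet"], ["machine"]],
  adjective: [["quiet"], ["curious"]],
  verb: [["writes"], ["imitates"], ["dreams"]],
};

// Expands a symbol into a flat list of words.
// The choice depends only on the symbol itself, never on its neighbors,
// which is what makes the grammar context-free.
function expand(symbol, rules, rng = Math.random) {
  if (!Object.prototype.hasOwnProperty.call(rules, symbol)) {
    return [symbol]; // no rule for it, so it is a literal word
  }
  const options = rules[symbol];
  const choice = options[Math.floor(rng() * options.length)];
  return choice.flatMap((part) => expand(part, rules, rng));
}

console.log(expand("sentence", grammar).join(" ") + ".");
```

This particular grammar always terminates because no rule can lead back to itself; a grammar with recursive rules would need some limit on depth.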

Story Teller

The following example uses a context-free grammar to generate a short story. This example uses Tracery, created by Kate Compton. The Cheap Bots, Done Quick service lets you make Twitterbots very quickly using Tracery. You can learn more about Tracery by watching this talk (16:16) by Kate Compton.

Here is the same generator, created using RiTa instead of Tracery. RiTa is a JavaScript library for working with natural language. It includes a context-free grammar parser, a Markov chain generator, and other natural language tools.

Marquee Maker

HTML is text, so CFGs can generate HTML!
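One reason a grammar suits HTML is that a rule can emit an opening tag and its closing tag together, so the output is always properly nested. The sketch below is a hypothetical illustration of that point, not the Marquee Maker source; `htmlGrammar`, `expandHtml`, and the depth limit of 8 are assumptions.

```javascript
// Every "element" rule produces an opening tag, some content, and the
// matching closing tag, so tags can never be closed in the wrong order.
const htmlGrammar = {
  element: [
    ["<b>", "content", "</b>"],
    ["<i>", "content", "</i>"],
    ["<marquee>", "content", "</marquee>"],
  ],
  content: [["text"], ["text"], ["element"]],
  text: [["Hello"], ["World"]],
};

// Expands a symbol into a string. Because "content" can produce another
// "element", the first option is forced past a depth limit so that
// expansion always ends.
function expandHtml(symbol, rules, rng = Math.random, depth = 0) {
  if (!Object.prototype.hasOwnProperty.call(rules, symbol)) {
    return symbol; // a literal tag or word
  }
  const options = rules[symbol];
  const index = depth > 8 ? 0 : Math.floor(rng() * options.length);
  return options[index]
    .map((part) => expandHtml(part, rules, rng, depth + 1))
    .join("");
}

console.log(expandHtml("element", htmlGrammar));
```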

Coding Challenges

Explore this chapter’s example code by completing the following challenges.

Modify the Example Above

- Change the value of person to a different name. •

- Change the value of number to a random integer between 0 and 100. •

- Add a second sentence to the template with two new placeholders. •

- Add an ‘s’ to ‘computer’ when number is not 1. •

- Add this to your template: The word "${word}" has ${letterCount} letters. •

- Create a variable for word and set its value to a random word from a small list. ••

- Create a variable for letterCount and set its value to the number of letters in word. ••

- Instead of using console.log() to show the expanded template, inject the result into the webpage. •••

- Alter the program so that the dynamic parts of the template appear in bold. •••

Keep Sketching!

Sketch

Explore generating and displaying text.

Challenge: It Was a Dark and Stormy Night

Make a program that generates bad short stories that start with “It was a dark and stormy night.”

The story must:

- be bad.

- be short.

- start with “It was a dark and stormy night.”

- be generated by code: consider using templates, Tracery, Markov chains, or anything else you want.

Ideally, your story should:

- be grammatically perfect.

- make sense, with consistent characters, relationships, and actions.

- follow a dark and stormy theme.

- be of the horror or mystery genre.

- have a clear structure: beginning (exposition), middle (rising action/conflict), and end (resolution).

Explore

Twine Tool Open-source tool for telling interactive, nonlinear stories.

Context-Free Grammar The Coding Train Daniel Shiffman talks about context-free grammars, Tracery, and RiTa.

Context-Free Challenge The Coding Train Daniel Shiffman builds a small context-free grammar from scratch.

L-Systems Wikipedia L-systems are a type of formal grammar often used in procedural graphics generation.

Insignificant Little Vermin Game This game, written by Filip Hracek, renders an open-world RPG game in writing.

donjon Collection Large collection of random text and dungeon generators for D&D and tabletop roleplaying.