Preamble

- Kraftwerk: Pocket Calculator

- ElectroBOOM: ESD Safety

- Human Benchmark

- Mystery Melody

- An Audio Illusion

Carefully Arranged Sand and Lightning

Light

We see by detecting electromagnetic radiation that reaches our eyes. The information we gather by sight is only a small amount of the information present in the EM field.

We can only see the EM radiation with wavelengths from around 390nm to 700nm that happens to enter our pupils. We have limited ability to discern frequencies even in the visible range, and we can’t* detect light polarization.

Even with these constraints, we are able to glean a highly detailed understanding of our surroundings. Vision is perhaps our most powerful sense. We are especially good at building a spatial understanding of our environment based on light.

Videos

- UV Imaging: How the Sun Sees You

- IR Imaging: Thermal Imaging and It’s Applications

- Richard Feynman on Light

Emissive Color

- Sunlight contains electromagnetic radiation in many wavelengths.

- An individual LED provides electromagnetic radiation at a very specific wavelength.

- An LED computer display has LEDs of three colors. It can vary the intensity of those three colors, but can’t provide electromagnetic radiation in the wavelengths between them.

- We perceive the mix of the three colors as a single color.

- Red, green, and blue LEDs are used because they correspond with our biology.

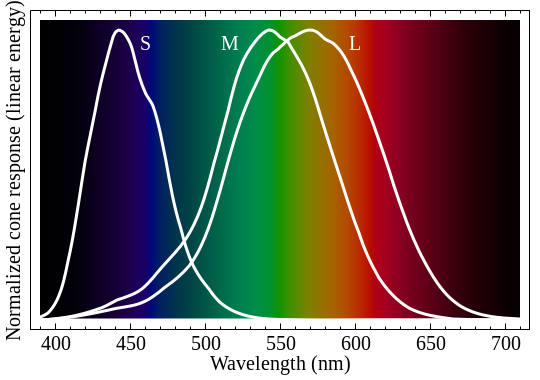

- Our eyes have trichromatic color vision, with sensors for red, green, and blue wavelengths. We can perceive colors between these wavelengths, but we can’t determine what combination of stimulating frequencies contributes to that perception.
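The mixing described above can be sketched in a few lines. This is a simplified model, assuming an idealized display where each pixel is three emitters with intensities from 0 to 255. The function name `mix` and the constants are made up for illustration, and real displays also apply gamma and color-space conversions that are ignored here.

```python
# Illustrative model only: a pixel is three emitters (red, green, blue),
# each with an intensity from 0 to 255. All names here are hypothetical.

def mix(*lights):
    """Additively combine RGB lights, clamping each channel to 0-255."""
    return tuple(min(255, sum(channel)) for channel in zip(*lights))

RED = (255, 0, 0)
GREEN = (0, 255, 0)
BLUE = (0, 0, 255)

# Red plus green is perceived as yellow, even though no emitter
# produces light at a "yellow" wavelength.
yellow = mix(RED, GREEN)
white = mix(RED, GREEN, BLUE)

print(yellow)  # (255, 255, 0)
print(white)   # (255, 255, 255)
```

The display never fills in the wavelengths between its three emitters. It relies on our eyes reporting the same sensation for the mix as for a single in-between wavelength.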

Reflective/Absorptive Color

- A reflective object reflects and absorbs each wavelength of light in different amounts.

- We perceive the reflections as a single color.

- A reflective color cannot be brighter than the lighting in any wavelength.

- We adjust our perceived color of an image based on our understanding of the lighting, as in the viral photo known as The Dress.
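One way to picture reflective color is as a per-band multiplication of the incoming light by the surface's reflectance. The sketch below assumes just three bands (red, green, blue), where real spectra are continuous. The lighting and paint values are invented for illustration.

```python
def reflect(light, reflectance):
    """Light leaving a surface: per-band light times reflectance (0.0 to 1.0)."""
    return tuple(l * r for l, r in zip(light, reflectance))

# Hypothetical values: energy in the red, green, and blue bands.
white_light = (1.0, 1.0, 1.0)
warm_light = (1.0, 0.7, 0.4)
red_paint = (0.9, 0.1, 0.1)   # reflects mostly red, absorbs most green and blue

under_white = reflect(white_light, red_paint)
under_warm = reflect(warm_light, red_paint)

# The reflection is never brighter than the lighting in any band.
assert all(out <= lit for out, lit in zip(under_warm, warm_light))

print(under_white)
print(under_warm)
```

The same paint sends different light to the eye under different lighting, which is why our visual system has to estimate the lighting before it can settle on a "true" color.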

Visible EM Range

Our Eyes

- Color Sensitivity

- Able to perceive electromagnetic waves from 390 to 700nm, and can differentiate hues as close as 1-10 nm.

- Trichromatic sensors for red, green, blue.

- Contrast Sensitivity

- Have a contrast range something like 1:1,000,000 (about 20 stops). Film cameras capture 9-10 stops, and high-end digital cameras are a little better. A typical screen might have a true contrast ratio of 1:1000.

- Angular resolution

- Our eyes focus light through a lens, providing directional information at a resolution of about 1 arcminute, or roughly 0.02°. That’s roughly 250 to 300 dpi at one foot away, about 3 pixels/mm at 1 meter, or something about 1.5 feet wide a mile away. We can see things much smaller than this when they are bright; we just can’t make out their shape. For example, a bright LED would be easily visible a mile away in a dark environment.

- Our eyes have ~120 million rods that detect brightness.

- Our eyes have ~6.5 million cones that detect color.

- For comparison, an HD display has 1920 x 1080 x 3 (6.2 million) LEDs. A high-end DSLR has about 45 million light sensors.

- Field of View

- ~160° x 175°, but resolution is heavily biased toward the center. See: foveated rendering.

- Frame Rate

- Our eyes don’t have a frame rate like a camera, in which all the photosensors refresh in unison at a set rate. We can generally tell a steady light from a flickering one at flicker rates up to 60+ Hz.
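The angular resolution and contrast figures in this list come from simple arithmetic, which can be rechecked with a short sketch. It assumes a resolution of exactly 1 arcminute and treats a stop as one doubling of light. The function names are made up for illustration.

```python
import math

ARCMINUTE_RAD = math.radians(1 / 60)   # 1 arcminute expressed in radians

def smallest_detail(distance):
    """Size of a detail spanning 1 arcminute at `distance` (same units)."""
    return distance * math.tan(ARCMINUTE_RAD)

def stops(contrast_ratio):
    """Photographic stops: each stop doubles the light, so stops = log2(ratio)."""
    return math.log2(contrast_ratio)

dpi_at_one_foot = 1 / smallest_detail(12)         # dots per inch at 12 inches
pixels_per_mm_at_1m = 1 / smallest_detail(1000)   # pixels per mm at 1000 mm
inches_at_one_mile = smallest_detail(5280 * 12)   # inches resolved at 1 mile
hd_subpixels = 1920 * 1080 * 3                    # one LED per color per pixel

print(f"{dpi_at_one_foot:.0f} dpi at one foot")
print(f"{pixels_per_mm_at_1m:.1f} pixels/mm at 1 meter")
print(f"{inches_at_one_mile:.1f} inches at one mile")
print(f"{stops(1_000_000):.1f} stops for a 1:1,000,000 contrast range")
print(f"{hd_subpixels:,} LEDs in an HD display")
```

With exactly 1 arcminute this works out to roughly 286 dpi at one foot, 3.4 pixels/mm at 1 meter, and about 18 inches at a mile. Rounding the angle up to 0.02° gives somewhat coarser figures. A million-to-one contrast range is just under 20 stops.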

Vision Impairment

- In the US more than 3% of those 40 years and older are either legally blind (20/200 vision or worse, with corrective lenses) or visually impaired (20/40 or worse, with corrective lenses).

- In the US more than 7% of males and 0.4% of females have some form of color-blindness.

Our Visual Cortex

Our Visual Cortex processes the data collected by our eyes to provide information about our environment.

- Depth perception (binocular and not)

- Persistent spatial understanding

- Pattern recognition

- Estimation of true color, accounting for lighting

Thoughts

Our understanding of color and color theory (primary colors and color wheels) is informed by the anatomy of our eye and the way our mind processes vision.

We consider a color as a single value: dark blue, pink, vivid green. We don’t think about the color of something as a little bit green, a little bit blue, and a lot red. We definitely don’t think of color as the sum of the many in-between wavelengths.

Sound

Sound is an audible wave of pressure that propagates through the air. Sound begins when something in contact with the air vibrates. As that thing pushes forward, it pushes the particles of air in front of it into the particles of air in front of them, making an area of higher pressure. This high-pressure area pushes out in all directions, and a wave of pressure begins to propagate through the air.

This pressure wave can push on other things, like microphones and our ears. Our ears are able to detect very rapid and subtle changes in this pressure, and we are then able to understand the amplitude, frequency, and even “shape” of these changes. Because we have two ears, spaced a few inches apart, we can compare what each ear hears to gain spatial information as well.
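A pure tone is the simplest case of such a pressure wave: the pressure at one point rises and falls as a sine function of time. The sketch below samples that curve the way a microphone and sound card might. The 44,100 samples-per-second rate is an assumption (a common audio rate), and `pressure_samples` is a made-up name.

```python
import math

def pressure_samples(frequency_hz, amplitude, duration_s, sample_rate=44100):
    """Sample a pure tone: pressure deviation from ambient at evenly spaced times."""
    count = round(duration_s * sample_rate)
    return [
        amplitude * math.sin(2 * math.pi * frequency_hz * n / sample_rate)
        for n in range(count)
    ]

# One hundredth of a second of a 440 Hz tone (the A above Middle C).
tone = pressure_samples(frequency_hz=440, amplitude=1.0, duration_s=0.01)

print(len(tone))                   # number of samples taken
print(max(abs(s) for s in tone))   # peak deviation, never above the amplitude
```

Amplitude sets how far the pressure swings, frequency sets how often, and the "shape" of a real sound comes from adding many such waves together.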

Videos

Our Ears

- Pitch Sensitivity

- We can hear pitches from 20 Hz to 20,000 Hz, and can differentiate frequencies as close as 5 cents (about 0.76 Hz at Middle C). Pitches are detected by 16,000-20,000 hairs in a curled-up tube, the cochlea. The hairs in the cochlea are each “tuned” to different frequencies. For reference, an 88-key piano ranges from A0 (27.5 Hz) to C8 (4186.01 Hz).

- Loudness Sensitivity

- We can detect pressure changes smaller than 1 billionth of atmospheric pressure. We can hear sounds about 10 trillion times more intense than that (about 130 dB), at which point they start hurting.

- Timbre

- We have the ability to detect “quality” of a tone based on overtones and other information.

- Hearing Impairment

- About 15% of Americans have some hearing loss. About 8% would benefit from using hearing aids.
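The pitch and loudness figures above can be recomputed with a little arithmetic. This sketch assumes twelve-tone equal temperament with A4 tuned to 440 Hz, and it treats the "10 trillion times" figure as a ratio of sound intensity (power). The function names are made up for illustration.

```python
import math

def piano_key_frequency(key_number):
    """Frequency in Hz of piano key 1 (A0) through 88 (C8).

    Assumes equal temperament with A4 (key 49) tuned to 440 Hz.
    """
    return 440.0 * 2 ** ((key_number - 49) / 12)

def cents_to_hz(frequency_hz, cents):
    """Width in Hz of an interval `cents` above `frequency_hz` (1200 cents per octave)."""
    return frequency_hz * (2 ** (cents / 1200) - 1)

def intensity_ratio_to_db(ratio):
    """Express a sound intensity (power) ratio in decibels."""
    return 10 * math.log10(ratio)

middle_c = piano_key_frequency(40)

print(f"A0: {piano_key_frequency(1):.2f} Hz")
print(f"C8: {piano_key_frequency(88):.2f} Hz")
print(f"Middle C: {middle_c:.2f} Hz")
print(f"5 cents at Middle C: {cents_to_hz(middle_c, 5):.2f} Hz")   # about 0.76 Hz
print(f"10 trillion to one: {intensity_ratio_to_db(1e13):.0f} dB")
```

Cents are a ratio, not a fixed number of Hz, so the same 5-cent step is wider in Hz at higher pitches. Decibels do the same job for loudness, squeezing an enormous range into small numbers.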

Our Auditory Cortex

Our Auditory Cortex processes the data collected by our ears to provide information about our environment.

- By comparing the amplitude, timing, and phase of audio information from each ear we can perceive the spatial source of sounds.

- Our spatial information about sound is less reliable than that of sight.

- We are able to listen to one source in a noisy environment: a specific person at a cocktail party, an instrument in a symphony.

- Very, very good temporal pattern detection. We notice when the beat is off.

- Very good interval comparison. We notice when a note is off key.

- Tightly coupled to language
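The timing cue mentioned above is tiny. A rough sketch shows the scale, assuming the ears are 0.2 m apart and sound travels at 343 m/s. Both are stand-in values, and the simple path-difference model ignores the way sound bends around the head.

```python
import math

SPEED_OF_SOUND_M_S = 343.0   # assumed: dry air at about 20 °C
EAR_SPACING_M = 0.2          # assumed spacing between the ears; varies by person

def interaural_time_difference(angle_deg, ear_spacing_m=EAR_SPACING_M):
    """Extra travel time in seconds to the far ear for a distant source.

    angle_deg is measured from straight ahead (0) toward one side (90).
    Uses the simple model: spacing * sin(angle) / speed of sound.
    """
    path_difference = ear_spacing_m * math.sin(math.radians(angle_deg))
    return path_difference / SPEED_OF_SOUND_M_S

for angle in (0, 30, 90):
    microseconds = interaural_time_difference(angle) * 1_000_000
    print(f"{angle:>2} degrees: {microseconds:.0f} microseconds")
```

Under these assumptions, a sound directly to one side reaches the far ear only about half a millisecond late, and a sound straight ahead arrives at both ears together.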

Vision vs. Hearing

Our visual and auditory sensory systems prioritize different types of information.

| Vision | Hearing |
|---|---|
| Two Eyes | Two Ears |
| EM | Air Pressure |
| 120 million sensors each | ~18k sensors each |
| 1 + 3 frequency responses | thousands of frequency responses |
| Lens + spatially arranged | No Lens + not spatially arranged |
| 160° FOV | 360° FOV |
| Contrast 1:1,000,000 | Contrast 1:10,000,000,000,000 |
| great at spatial understanding | great at spectral understanding |

We see a single color at a very specific point. We hear chords from a general direction.

Vision + Hearing

Our visual and auditory sensory systems support one another.

Key Takeaways

- Computers are physical machines.

- Computers can interface with the physical environment.

- Data flows through our physical environment.

- Our eyes and ears are sensors.

- They sense only a small amount of the data flowing through our environment.

- Our eyes and ears are significantly different in structure.

- The types of data gathered by our eyes and ears are rooted in their structure.

- Our eyes and ears are just sensors; they provide data.

- Our brain is needed to process this data and provide information.

- Our brain’s visual and auditory data processing is powerful and valuable.

- We can take advantage of this power by creating visual and auditory form.